In-house actuarial platforms aren’t a new concept. What is new is the ability to deploy them at scale in a secure and compliant manner.

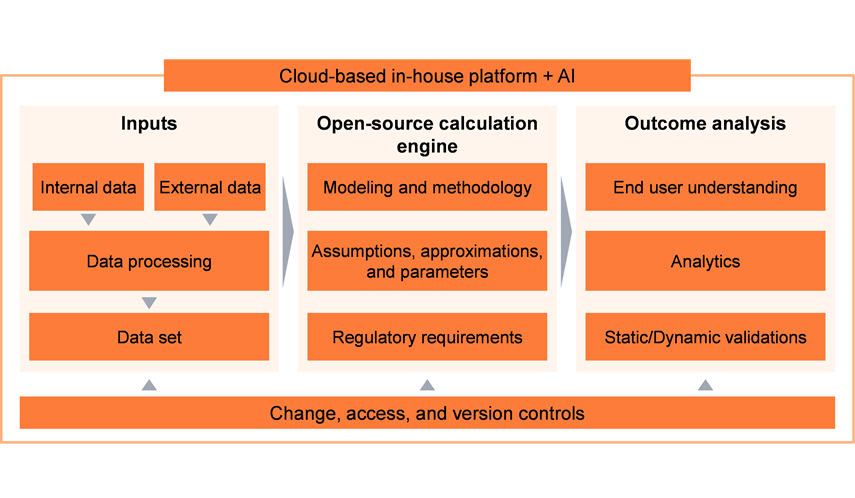

Data used to sit in silos, and processing power was capped by on-hand hardware, which made in-house builds hard to scale and govern. Times have changed. Technological advancements, including centralized data and computing resources and rapidly maturing artificial intelligence (AI), are making in-house actuarial platforms increasingly attractive and feasible. Actuaries and their broader organizations can now reduce operational complexity and lower costs by integrating models, data, and analytics within a single, consistently governed environment (a “single source of truth”) that produces reliable information for timely and confident decision-making.

Today’s in-house actuarial platforms promise a unified, controlled environment for managing the actuarial lifecycle.

Thanks to their modular and scalable design, these platforms can enhance modeling agility and adaptability. You can rapidly implement product variations, address regulatory updates, and explore strategic optimization scenarios without depending on external vendor release cycles. The code deployment tools you probably already use can strengthen error handling and make audit trails more transparent and traceable, which improves the explainability of results. Each assumption, dataset, calculation, and analytical step can be versioned, documented, reviewed, and audited for internal governance and external assurance.
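To make the idea of versioned, auditable assumptions concrete, here is a minimal sketch in Python. It assumes assumptions can be expressed as a JSON-serializable dictionary; the names `AssumptionSet`, `AuditTrail`, and `content_hash` are hypothetical and chosen only for illustration, not taken from any particular platform.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone


def content_hash(payload: dict) -> str:
    """Return a stable SHA-256 fingerprint of a JSON-serializable dict."""
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class AssumptionSet:
    """Hypothetical container for a named set of assumptions."""
    name: str
    values: dict
    author: str

    @property
    def version(self) -> str:
        # The version is derived from the content, so any change to a value
        # produces a different identifier.
        return content_hash({"name": self.name, "values": self.values})[:12]


@dataclass
class AuditTrail:
    """Hypothetical in-memory trail; a real one would persist entries."""
    entries: list = field(default_factory=list)

    def record(self, assumptions: AssumptionSet, note: str) -> dict:
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "assumption_set": assumptions.name,
            "version": assumptions.version,
            "author": assumptions.author,
            "note": note,
        }
        self.entries.append(entry)
        return entry
```

Because the version identifier comes from the content itself, two runs that report the same version used identical assumptions, and a reviewer can confirm that without comparing the values by hand.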

On top of all that, in-house platforms facilitate disciplined cost and performance management. Existing coding standards can help you build software with clear visibility into cloud spend (which facilitates accurate budgeting) and set up guardrails that help reduce bundled fees. Capacity can scale up for peak runs and scale down when idle, so your spend matches actual use. Workloads route to the most efficient processors for the task: central processing units (CPUs) for general calculations and graphics processing units (GPUs) for large, parallel projections. This shortens run times, delivering faster results at lower unit costs.
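The routing and scaling logic described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a production scheduler: the `Workload` class, the 10-million-cell GPU threshold, and the worker limits are all hypothetical values you would tune to your own environment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    """Hypothetical description of a model run."""
    name: str
    n_policies: int
    n_scenarios: int
    parallelizable: bool


# Assumed cut-off: below this many policy-scenario cells, GPU overhead
# is taken to outweigh the benefit. The figure is illustrative only.
GPU_CELL_THRESHOLD = 10_000_000


def choose_processor(workload: Workload, threshold: int = GPU_CELL_THRESHOLD) -> str:
    """Route large, parallel projections to GPU and everything else to CPU."""
    cells = workload.n_policies * workload.n_scenarios
    if workload.parallelizable and cells >= threshold:
        return "gpu"
    return "cpu"


def workers_needed(queued_jobs: int, jobs_per_worker: int = 4, max_workers: int = 50) -> int:
    """Scale to zero when idle and up to a spending cap at peak."""
    if queued_jobs <= 0:
        return 0
    required = -(-queued_jobs // jobs_per_worker)  # ceiling division
    return min(max_workers, required)
```

For example, a stochastic run of 500,000 policies across 1,000 scenarios would route to GPU under these assumed settings, while a single-scenario run on the same block would stay on CPU. The `max_workers` cap is one simple form of the spending guardrail mentioned above.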

Generative AI (GenAI) is essential for supporting model development. It can translate business specifications into executable logic, generate robust test cases, embed necessary control structures, and produce clear documentation. After model implementation, specialized AI-driven agents can help you automate routine tasks related to model maintenance, governance, and ongoing operational controls.

In addition to GenAI, integrated analytics can improve insight and speed, strengthening the business case for a centralized, hybrid in-house platform. Machine learning (ML)-powered analytics offer significant enhancements beyond routine model operation. You can apply ML to improve experience analyses, detect anomalies, identify key business drivers, approximate scenarios, and evaluate alternative strategies.
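As a simple illustration of anomaly detection in an experience analysis, the sketch below flags cells whose actual-to-expected (A/E) ratio sits far from the rest. Note the substitution: it uses a robust z-score based on the median absolute deviation, a statistical screen standing in for a trained ML model. The function name, the cell labels, and the 3.5 threshold are assumptions for illustration.

```python
from statistics import median


def flag_anomalies(ae_ratios: dict[str, float], threshold: float = 3.5) -> list[str]:
    """Return the cells whose A/E ratio is an outlier by robust z-score."""
    values = list(ae_ratios.values())
    med = median(values)
    # Median absolute deviation: a spread measure that outliers barely move.
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        return []  # no spread to measure against, so nothing is flagged

    flagged = []
    for cell, ratio in ae_ratios.items():
        # 0.6745 rescales the MAD so the score is comparable to a standard
        # z-score when the data are roughly normal.
        robust_z = 0.6745 * (ratio - med) / mad
        if abs(robust_z) > threshold:
            flagged.append(cell)
    return flagged


# Hypothetical A/E ratios by gender and age band.
experience = {
    "M_30-39": 0.98, "M_40-49": 1.02, "M_50-59": 1.01,
    "F_30-39": 0.97, "F_40-49": 1.00, "F_50-59": 1.65,
}
outliers = flag_anomalies(experience)
```

A screen like this only tells you where to look. Whether a flagged cell reflects a data error, a credibility issue, or a genuine shift in experience remains an actuarial judgment.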

Widely adopted open-source tools, such as Python, can provide a common base. But language alone isn’t enough. Feasibility comes from:

All of this improves portability across cloud environments and operational functions, making operations reliable at scale as well as facilitating training and use.

As a bonus, there’s a large talent pool of actuaries, software engineers, and coders experienced in open-source languages, giving you access to a wide range of people who are available on a temporary, part-time, or full-time basis.

AI is a key development accelerator and can enhance automated monitoring post-development.

You probably already use actuarial vendor solutions to address complex regulatory requirements such as LDTI and IFRS 17. Rather than replacing them outright, you could use an implementation strategy based on your organization’s priorities, available talent, and risk tolerance.

Here are three viable paths to quickly develop in-house capabilities where vendor solutions fall short.

Regardless of the path you choose, certain foundational principles are essential to promote effective and sustainable implementation. You should leverage structured software development life cycle (SDLC) processes for model creation, testing, deployment, and ongoing maintenance.

To promote scalability, prioritize modular, efficient model design and favor reusable components over product-specific or accounting-specific builds. This will improve adaptability and long-term cost-effectiveness. Efficient model structures that employ object-oriented programming principles, vectorized processing, and strategic GPU or CPU selection based on workloads can enhance your performance, resource utilization, and overall platform efficiency.
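One way to picture reusable components is a projection engine that knows nothing about any specific product and simply sums whatever cash flow components it is given. The sketch below is a deliberately simplified, hypothetical example: the `CashFlowComponent` interface, the two component classes, and the single-decrement projection are assumptions for illustration, and it ignores discounting, lapses, and expenses.

```python
from typing import Protocol, Sequence


class CashFlowComponent(Protocol):
    """Hypothetical interface: anything that reports a cash flow for year t."""

    def cash_flow(self, t: int, in_force: float) -> float: ...


class LevelPremium:
    def __init__(self, annual_premium: float) -> None:
        self.annual_premium = annual_premium

    def cash_flow(self, t: int, in_force: float) -> float:
        # Premium income from the policies still in force.
        return self.annual_premium * in_force


class DeathBenefit:
    def __init__(self, face_amount: float, qx: Sequence[float]) -> None:
        self.face_amount = face_amount
        self.qx = qx

    def cash_flow(self, t: int, in_force: float) -> float:
        # Expected claims are an outflow, hence the negative sign.
        return -self.face_amount * self.qx[t] * in_force


def project(components: Sequence[CashFlowComponent], qx: Sequence[float]) -> list[float]:
    """Sum component cash flows per year, decrementing in-force by mortality."""
    in_force = 1.0
    net_flows = []
    for t, q in enumerate(qx):
        net_flows.append(sum(c.cash_flow(t, in_force) for c in components))
        in_force *= 1.0 - q
    return net_flows
```

Adding a rider or a new product variation then means writing one more component class and leaving the engine untouched, which is the adaptability benefit described above.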

It’s essential to confirm alignment with broader enterprise technology strategies and operational practices as defined by your CIO or CTO, including cloud infrastructure, software deployment standards, and data governance.

You should leverage established governance frameworks:

Note that these practices do not replace actuarial-specific governance.

Don’t forget to complement and strengthen existing controls, including model change control and independent validation, assumption governance, versioning of model inputs and outputs, and controlled integration of results with finance systems and reporting processes.

Furthermore, it’s critical to adopt a set of Responsible AI practices that enable clear human oversight, testing protocols, documentation standards, and performance monitoring. Defining auditability requirements up front and embedding them systematically into code, data schemas, run logs, and approval processes promotes strong governance.
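As one example of embedding auditability into run logs, the following sketch chains each log entry to the previous one with a hash, so that a later edit to any entry can be detected. The field names (`run_id`, `model_version`, `approved_by`) and both function names are hypothetical, and the sketch assumes run records do not themselves use the keys `prev_hash` or `entry_hash`.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_run(log: list, record: dict) -> dict:
    """Append a run record, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["entry_hash"] if log else GENESIS_HASH
    body = {"prev_hash": prev_hash, **record}
    entry = {**body, "entry_hash": _digest(body)}
    log.append(entry)
    return entry


def verify_log(log: list) -> bool:
    """Recompute every hash and check that the chain is unbroken."""
    prev_hash = GENESIS_HASH
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "entry_hash"}
        if body["prev_hash"] != prev_hash or _digest(body) != entry["entry_hash"]:
            return False
        prev_hash = entry["entry_hash"]
    return True
```

In use, each model run would append a record such as `{"run_id": "2024Q4-001", "model_version": "1.3.0", "approved_by": "reviewer_a"}`, and `verify_log` would return `False` if any stored entry were altered afterward.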
